June 24, 2009

The quick and the stupid? Or the clever and the slow?

Do students who finish tests quickly score better or worse? This is an interesting question for educators. There is an implicit, and not unreasonable, bias towards the idea that if a task is done more quickly for the same grade (or mark), it's better done. Yet there is a time and a place for being quick, and a time and a place for considering your answers more carefully.

I had an opportunity to do some "research" on this recently. A colleague and I gave an end-of-semester test to 91 students in our 100-level (freshman) American history survey. Students had up to 50 minutes to answer 70 questions in a range of formats, including short answer, multiple choice, and identifications. From our mid-semester test we expected the median time to completion to be about 40 minutes. Our goal was a test where the challenge was the content, not rushing to finish.

Because my colleague and I were both heading out of town shortly after the test, the students answered the test on a single side of paper each. We collected the papers in a box as they came in, then ran all 91 tests through the scanner. The scanner numbered the pages automatically, recording the order in which the tests were handed in, so once we'd marked the tests all I had to do was rank the scores in Stata (when you have a hammer, everything looks like a nail).
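Ranking the scores is a one-liner in Stata, but the idea is worth making explicit because ties matter here: several students can share a mark, and a dozen students handed in at the same moment. The sketch below is a Python stand-in, not the Stata code used for this post. The function name `average_ranks` is hypothetical, and it assumes the common convention that tied values share the average of the ranks they span, with rank 1 going to the lowest value (so a lower rank is a worse score).

```python
def average_ranks(values):
    """Rank values from 1 (lowest) upwards; tied values share the mean of
    the ranks they would otherwise occupy. Hypothetical helper."""
    # Sort positions by value so that equal values end up adjacent.
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j to the end of the run of values tied with order[i].
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        # Sorted positions i..j would get ranks i+1..j+1; ties share the mean.
        mean_rank = (i + 1 + j + 1) / 2
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


# Hypothetical marks out of 75, listed in scanner (page) order.
print(average_ranks([51, 62, 48, 62, 70]))  # [2.0, 3.5, 1.0, 3.5, 5.0]
```

The two marks of 62 would occupy ranks 3 and 4, so each receives 3.5.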

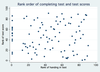

My prior belief before seeing the data was that there might be a U-shaped relationship between the rank of handing in and the test score. Students who did well would be either quick or slow, hares or tortoises winning the race in different ways. Of course, one could just as easily hold the prior belief of an inverse U-shape, with the students who did poorly clustered at both ends. Some would finish quickly, either realising they didn't know anything or rushing through the test to go [insert prejudice about under-motivated students here], while others would do poorly by failing to complete all the questions.

To help interpret the graph: a lower rank on completion means the student waited longer to hand in their test. The vertical line at the left of the graph represents the 12 students who all handed in their tests at the very end, when we called "time". A lower rank on the test score means a worse score.

At first glance, there is no discernible relationship between when students handed in their test and the mark they received. A moving average gives a slightly different perspective.
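One way to put a number on "no discernible relationship" is Spearman's rank correlation, which is simply Pearson's correlation computed on ranks. The post doesn't say whether any correlation was calculated, so treat this as an illustrative sketch: `spearman_from_ranks` is a hypothetical helper that takes the completion ranks and the score ranks (already computed, with ties averaged) and returns a value between -1 and 1.

```python
import math


def spearman_from_ranks(rank_x, rank_y):
    """Spearman's rho: Pearson's correlation applied to two lists of ranks.
    Hypothetical helper; assumes ranks were computed beforehand."""
    if len(rank_x) != len(rank_y) or len(rank_x) < 2:
        raise ValueError("need two rank lists of equal length (at least 2)")
    n = len(rank_x)
    mean_x = sum(rank_x) / n
    mean_y = sum(rank_y) / n
    # Co-variation of the two rankings and the spread of each around its mean.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(rank_x, rank_y))
    sxx = sum((a - mean_x) ** 2 for a in rank_x)
    syy = sum((b - mean_y) ** 2 for b in rank_y)
    return sxy / math.sqrt(sxx * syy)


completion = [1, 2, 3, 4, 5]
print(spearman_from_ranks(completion, [5, 4, 3, 2, 1]))            # -1.0
print(spearman_from_ranks(completion, [4.5, 2.5, 1, 2.5, 4.5]))    # 0.0
```

A rank correlation only detects a steady upward or downward trend. The second example is a perfect U, the shape of the prior belief above, and it still gives a correlation of exactly zero, which is one reason to look at a moving average as well.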

Recall that a lower completion rank means the student waited longer to hand in the test, and note that the overall mean for the test was a score of 51.3 out of 75 (68.4%, a B on our grade scale).

A centred moving average, taking five observations either side of each test, shows how student performance varied with the order of submission. The 12 students who waited until the very end to submit averaged slightly higher than the class as a whole, but the difference came nowhere near statistical significance. There isn't strong evidence that the slower students are more careful and therefore score higher.
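The moving average described above can be sketched as follows. It assumes the scores are listed in order of completion rank and averages each test together with up to five tests either side (11 in total). How the original Stata smoothing handled the first and last five tests isn't stated; this version simply shrinks the window near the ends, which is an assumption. `centred_moving_average` is a hypothetical name.

```python
def centred_moving_average(values, half_width=5):
    """Mean of each value together with up to `half_width` neighbours on
    each side. Near the ends the window is truncated rather than padded
    (an assumption; other smoothers pad or drop the end points)."""
    n = len(values)
    smoothed = []
    for i in range(n):
        lo = max(0, i - half_width)
        hi = min(n, i + half_width + 1)  # slice end is exclusive
        window = values[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


print(centred_moving_average([1, 2, 3, 4, 5], half_width=1))
# [1.5, 2.0, 3.0, 4.0, 4.5]
```

With `half_width=1` the two end values average over only two observations, so the smoothed line is noisier at the edges, exactly where the 12 last-minute submitters sit.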

The average rises towards the middle of the submission order and then falls back towards the overall mean. Note what this last fact shows: the students who finished the test earliest did no worse than average. At least in this class, on this test, the students who finished early were not rushing to slack off.
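The post doesn't say which test lay behind "nothing that approached statistical significance". As one hedged illustration, not necessarily the method used here, a permutation test checks whether a subgroup (the 12 who waited until time was called, or the earliest finishers) differs from everyone else by more than random relabelling would produce. Comparing the subgroup with the rest of the class is equivalent to comparing it with the grand mean, because the grand mean does not change under shuffling. `permutation_p_value` and its parameters are hypothetical.

```python
import random


def permutation_p_value(group, rest, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means between `group`
    and `rest`: the share of random relabellings of the pooled scores whose
    gap in means is at least as large as the observed gap."""
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    pooled = list(group) + list(rest)
    k = len(group)
    observed = abs(sum(group) / k - sum(rest) / len(rest))
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        gap = abs(sum(pooled[:k]) / k - sum(pooled[k:]) / (len(pooled) - k))
        if gap >= observed - 1e-12:  # small tolerance for floating-point ties
            extreme += 1
    # Adding one to numerator and denominator keeps the estimate above zero.
    return (extreme + 1) / (n_permutations + 1)
```

The result is a Monte Carlo estimate; raising `n_permutations` narrows its error at the cost of run time.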

In conclusion, there is some relationship between time to complete the test and scores, but it is not an obvious one.

Posted by eroberts at June 24, 2009 12:59 PM